Magic Leap

Platform Redesign

For more than three years at argodesign, I was part of a small external design group that led deep user experience development of Magic Leap's native platform in the run-up to the release of the ML2.

As the team's Motion Director, I led exploration and refinement of unique spatial interactions, defined a set of global motion principles for brand expression (touching everything from the launch menu to hardware LEDs), and helped to create a true spatial-first operating system. The ML2 implements much of our work, and thinking disseminated from our designs can be found in MR products from Meta and Apple.

Role: Motion Director

A quick-access guide for developers and motion designers

Over the course of dozens of projects, explorations, and user flows, I devised a set of motion principles that expressed well across the broad spectrum of spatial computing, but also held up when translated into two dimensions and applied to system protocols.

⇢ Theory

↳ Timing Guides

↳ Bezier References

↳ Millisecond Values

⇢ "When to Apply"

I refined the thinking and theory behind the practice and wrote a concise set of principles, timing guides, bezier curves, and to-the-millisecond values to serve as a baseline that could be applied and expanded on across the platform and hardware.

Prism Systems

UI Explorations

I led motion development and visual exploration for a redesign of “Prisms” (essentially a spatial ‘window’). Through this process, I created the 'Anima' system, a new set of forms and functions that dramatically reduced friction and created a more immersive interaction model.

In a radical departure from the maximalist prism designs of the ML1, in which all manipulation came through invoking a contextual menu, I re-imagined the prism as a directly manipulated, minimal container, invisible until called on.

With a somewhat eccentric After Effects rig, devised for quick iteration, I explored hundreds of models and user flows to surface a more sophisticated, subtler approach to the most ubiquitous tools on the device while maintaining and often enhancing prism utility and usability.

Controls explainer loop for quick in-headset reference

Anima prism rotation

Anima prism direct-manipulate movement

Anima prism resize

Anima bi-manual scaling

Removing the old design's box outlines and thick, purple corners, I created a translucent volume and contextual outlines invoked only under a user's focus. The lines themselves now subtly guide the user through their interaction options and disappear when their job is done.

Anima scale select

Anima cursor + bound indicator

Anima cursor + bounds interaction

Anima cursor + bound indicator

Lumin

System Level Assistant

Lumin Assistant was a placeful, persistent, system-level MR assistant meant to intuitively follow and help users. Easily invoked and situationally aware, it served as a traditional voice assistant as well as a guide through the new paradigms of spatial computing.

Spatially, the entity was a balance of brand look & feel, technical limitations, and de-anthropomorphism.

The spatial style I presented eventually evolved into a more traditional voice-only OS feature with a visual overlay, which I then helped to stylize.

Look development (After Effects + Element 3D)

Proof of concept animation (After Effects + Element 3D)

Voice-only stylization reference (After Effects)

Destinations: Office (After Effects + Element 3D)

Destinations

Persistent Placefulness

A global, persistently placeful layer over the real world can be a confusing concept. Early in my work for Magic Leap, there was an immediate need to illustrate to investors and onboarding personnel how a spatial metaverse might work.

With my work on 'Destinations', the persistent world arm of the Lumin platform, I created a series of videos clearly showing how a location-based experience might work and be utilized by businesses like offices, retail, hospitality, and warehouses.

Destinations: Retail (After Effects + Element 3D)

Destinations: Brewery (After Effects + Element 3D)

Gestures

Controllerless Interaction

Exploring the possibilities of a control-free mixed reality experience, I helped to flesh out, test, and ship a gesture-based interaction model, within the restrictions of the ML2 hardware, that has carried into newer systems and helped to define the current industry standard.

Additionally, I shot, composited, and animated assets placed throughout the operating system to teach users how to utilize the gesture functions.

A design story I shot & produced about our gesture system

System assets illustrating use (After Effects)

Hand-tracking explorations (After Effects)

Applying the Unified Motion Language System

LEDs

Hardware LED Animations

Not just a collection of blinking dots, my LED system represents months of research and application of my Unified Motion Language System in developing the ML2's hardware feedback and status communication. The system was limited to five LEDs on the computer and one each on the headset and controller. It created a consistent language tied to the brand expression while giving the device personality and utility at an absolute minimum of fidelity.

Prism Movement

Friction Free

A Frictionless Interaction Model

Working closely with a select team of engineers and Creative Technologists over more than two years and countless iterations, I distilled a complex paradigm of object and user interactions into a simple set of rules through a series of brief, digestible, shareable animated clips.

Designed in After Effects with Element 3D for fast iteration.

Surface Snapping

Prism Swapping

Prism Tabs

Prism Snapping

Prism Scale

Surface Occlusion

Minimize

Passthrough

Projects

USAA x NFLDirection

ApolloMotion Language System

Patrón TequilaBrand Anthem

AppleTitle Design

Realtor.comMotion Design

T-MobileExperiential

Design StoriesVideo Production

MetaUI Vision

CitiMarketing

EtihadFuture Vision

Sirius XMVision Exploration

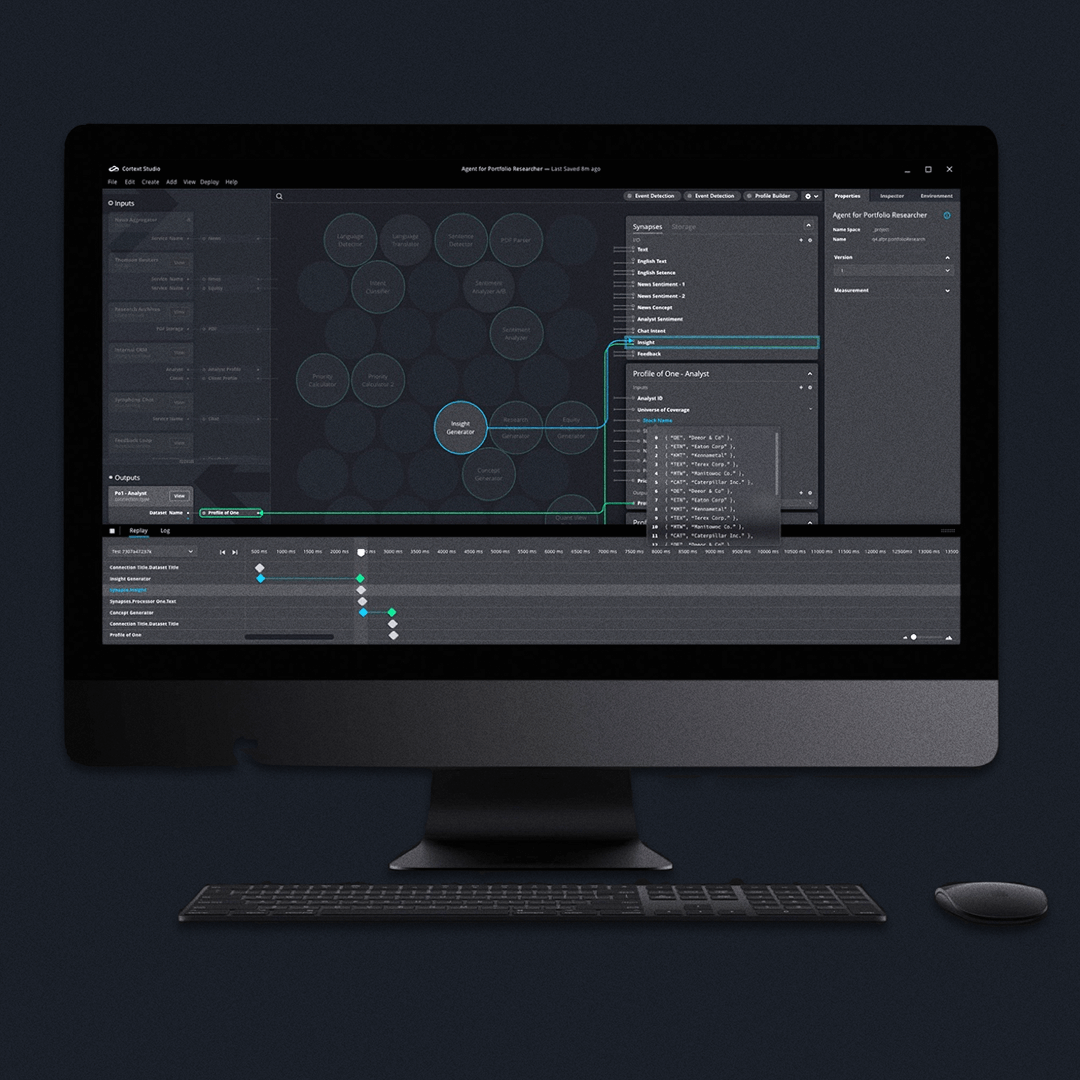

Cognitive ScaleDesign Story

Magic Leap, Kinetic TypeBrand Identity

Under ArmourMotion Design

VRBO x FödaIdentity

DXCProduct Video

NetflixProduct Design

DellProduct Videos

Patrón Cocktail LabProduct Video

WaldoOrientation Animation

WinkProduct Design

Finger on the Pulse NYCIllustration

Canvas by DellDocumentary Series